Introduction

Before we dive into the concepts of concurrency, parallelism, multi-processing, and distributed systems, let’s warm up with some simple examples.

You can run the code snippet in your browser, thanks to Pyodide, a port of CPython to WebAssembly that runs Python on the web. Since Pyodide prints to console.log by default, we have made some modifications to show the output returned from the code block, just like in a Jupyter notebook.

The code defines a function that takes the length of a list (n) as an argument and returns the time taken to create this list.

Looking at the output, we can see that the time taken grows with the size of the list. This makes sense, because more elements mean more operations.
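A minimal sketch of the kind of timing function described above, in plain Python. The name time_list_creation and the list sizes are our own assumptions for illustration, and the timings will differ from machine to machine.

```python
import time


def time_list_creation(n):
    """Return the number of seconds taken to build a list of n integers."""
    start = time.perf_counter()
    numbers = [i for i in range(n)]  # the work being measured
    elapsed = time.perf_counter() - start
    return elapsed


# Hypothetical sizes, chosen only to make the growth visible.
for n in (1_000, 10_000, 100_000, 1_000_000):
    print(f"n={n:>9,}: {time_list_creation(n):.6f} s")
```

Each tenfold increase in n should take roughly ten times longer, although small sizes are noisy because the measured time is close to the timer's resolution.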

But what if we want to create a very large list, or perform some complex operations on it? Doing the work sequentially, one step after another, might take too long. Is there a better way to do things? Maybe we can use more processing power to speed things up, or do something else while this operation is running?

Let’s try a more challenging task (we don’t recommend running it, as it might crash your browser).

In this task, we are trying to find an item (that doesn’t exist) in a list of a given size, and we measure how long the search takes. Because the item is not in the list, the search has to check every element before giving up, so for a large enough list this takes a very long time on any machine! This illustrates why we need to learn about high-performance computing.
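A hedged sketch of such a search task follows. The function name time_missing_search and the target value -1 are assumptions made for illustration, and the size used here is deliberately modest so that it is safe to run.

```python
import time


def time_missing_search(n):
    """Search a list of n integers for a value it does not contain.

    Returns (found, seconds).
    """
    items = list(range(n))
    target = -1  # never present, because the list holds 0 .. n-1
    start = time.perf_counter()
    found = target in items  # linear scan: every element is compared
    elapsed = time.perf_counter() - start
    return found, elapsed


# A modest, hypothetical size; much larger values can exhaust memory.
found, seconds = time_missing_search(1_000_000)
print(found, f"{seconds:.4f} s")
```

Building the list costs memory proportional to n as well, which is why very large sizes can crash a browser tab before the search even starts.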

In today’s era of big data and artificial intelligence, high performance is essential. We want our data to be streamed quickly and efficiently. We don’t want our YouTube video to buffer because there are too many viewers. We don’t want to miss an online event or have a live stream interrupted. And we certainly don’t want our multiplayer game to lag when we are in a close battle with our opponent.

Many domains depend on high-performance computing, such as scientific computing (climate modeling, genomics, physics simulations), big data analytics (web search, social media, recommender systems), artificial intelligence (machine learning, computer vision, natural language processing), and more. They all require handling the increasing complexity and scale of data and computations.

To achieve high-performance computing, we need to design and develop systems that can leverage multiple resources and coordinate them efficiently. And that’s what we are going to learn in this article. We will start from the basics and build our way up to more advanced topics.

We will cover the following concepts: concurrency, parallelism, multi-processing, and distributed systems. We will explain what they mean, how they differ from each other, and why they are important for high-performance computing.

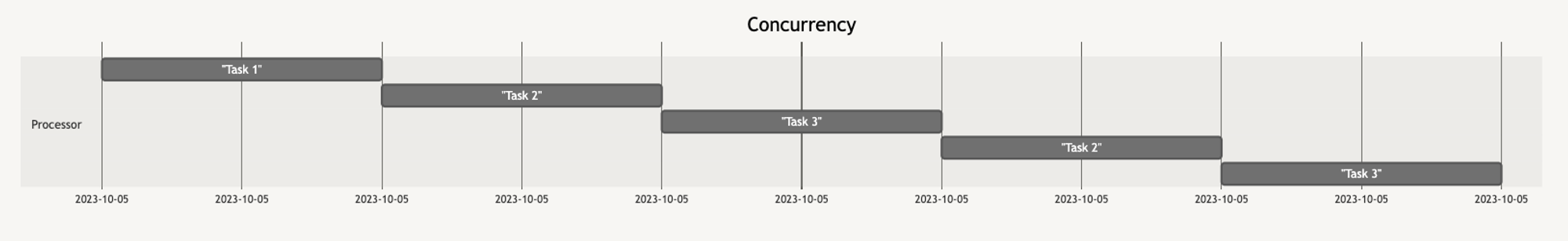

Concurrency

- Concurrency is the ability of a system to perform multiple tasks or computations at the same time or in an overlapping manner. This means that a system can have more than one task in progress at a given time, and ensure that it completes all of them eventually.

- However, concurrency does not necessarily imply parallelism. A system can be concurrent without being parallel, if it switches between the tasks quickly enough to give the illusion of simultaneous execution. This is called interleaving or time-slicing.

- Because the system switches between tasks very often, giving and taking away resources each time, it needs to save and restore each task’s context (its state and memory). This context switching introduces some overhead and complexity in the system.
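Interleaving can be sketched on a single thread with asyncio. This is only an illustration under our own assumptions: the names worker, main, and run_interleaved are hypothetical, and asyncio.sleep(0) stands in for any point where a task gives up control.

```python
import asyncio


async def worker(name, steps, log):
    """Record each step, then hand control back to the event loop."""
    for step in range(steps):
        log.append((name, step))
        await asyncio.sleep(0)  # yield so another task can run


async def main():
    log = []
    # Both workers are in progress at once, but only one runs at any instant.
    await asyncio.gather(worker("A", 3, log), worker("B", 3, log))
    return log


def run_interleaved():
    return asyncio.run(main())


if __name__ == "__main__":
    print(run_interleaved())
```

The log alternates between A and B even though nothing runs in parallel: the event loop switches tasks at every await, which is concurrency without parallelism.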

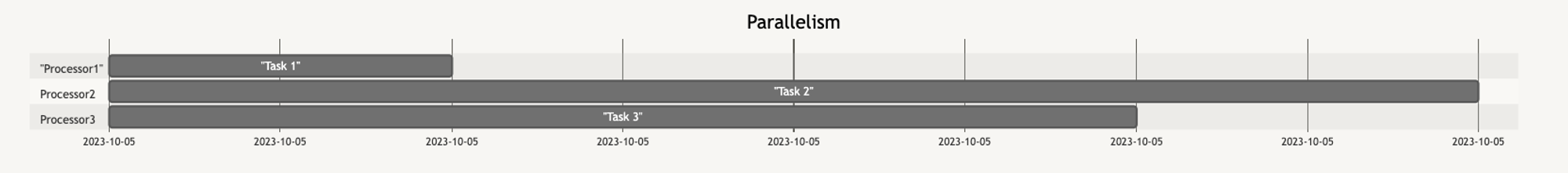

Parallelism

- Parallelism is a special case of concurrency where multiple tasks or computations are executed simultaneously on different processing units or resources. Parallelism can improve the performance and efficiency of a system by exploiting the inherent parallel nature of data and computations.

- For example, if we have two different tasks that are independent and can run on their own, and we also have two processors, why should we run them on only one processor? Why not utilize both processors and run them in parallel?

- Parallelism can be achieved at different levels, such as bit-level, instruction-level, data-level, or task-level. Depending on the level of parallelism, different hardware and software techniques can be used to implement it.
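The two-tasks, two-processors idea above can be sketched at the task level with concurrent.futures. The functions sum_of_squares and count_multiples are hypothetical stand-ins for any two independent jobs, and whether they truly run at the same time depends on how many cores the machine has.

```python
from concurrent.futures import ProcessPoolExecutor


def sum_of_squares(n):
    """First independent task: sum of i*i for i in range(n)."""
    return sum(i * i for i in range(n))


def count_multiples(limit, k):
    """Second independent task: how many integers in range(limit) divide by k."""
    return sum(1 for i in range(limit) if i % k == 0)


def run_both_in_parallel():
    # Two worker processes, one per task; neither task depends on the other.
    with ProcessPoolExecutor(max_workers=2) as pool:
        squares = pool.submit(sum_of_squares, 200_000)
        multiples = pool.submit(count_multiples, 200_000, 7)
        return squares.result(), multiples.result()


if __name__ == "__main__":
    print(run_both_in_parallel())
```

The `if __name__ == "__main__":` guard matters here, because on some platforms worker processes start by re-importing the main module.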

Multi-Processing

Quick note on Multi-Processing vs Parallelism

- Multiprocessing is a specific way of achieving parallelism by using multiple processors or cores within a single system.

- Parallelism is a more general concept that refers to executing multiple tasks or computations simultaneously on different processing units or resources, which could be within a single system or across multiple systems.

- Multiprocessing is the use of multiple processors or cores within a single system to execute concurrent or parallel tasks or computations. Multiprocessing can be achieved by using multi-core processors, multi-processor systems, or hybrid systems that combine both.

- Multiprocessing can offer several benefits, such as increased performance, scalability, fault-tolerance, and resource utilization. However, it also poses several challenges, such as synchronization, communication, load balancing, and consistency.

- We will explore some of these challenges and how to overcome them in later sections.
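As a first taste of multiprocessing in Python, here is a rough sketch that splits one large computation into near-equal chunks, one per core, and combines the partial results. The helpers split_range, chunk_sum, and parallel_sum are hypothetical names, and summing integers is just a placeholder workload.

```python
import multiprocessing as mp


def split_range(n, parts):
    """Split range(n) into `parts` contiguous (start, stop) chunks of near-equal size."""
    step, extra = divmod(n, parts)
    chunks, start = [], 0
    for i in range(parts):
        stop = start + step + (1 if i < extra else 0)  # spread the remainder
        chunks.append((start, stop))
        start = stop
    return chunks


def chunk_sum(bounds):
    """Work done by one process: sum the integers in [start, stop)."""
    start, stop = bounds
    return sum(range(start, stop))


def parallel_sum(n, workers=None):
    """Sum range(n) using a pool of worker processes (one per core by default)."""
    workers = workers or mp.cpu_count()
    with mp.Pool(processes=workers) as pool:
        return sum(pool.map(chunk_sum, split_range(n, workers)))


if __name__ == "__main__":
    print(parallel_sum(1_000_000) == sum(range(1_000_000)))
```

Giving every process a chunk of similar size is a simple form of load balancing. For work this cheap, the cost of starting processes and passing data between them can outweigh the gain, which is one of the trade-offs mentioned above.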

Distributed systems

- Distributed systems are systems that consist of multiple components located on different machines that communicate and coordinate their actions by passing messages. Distributed systems can be used to achieve concurrency or parallelism by utilizing multiple machines or clusters of machines as processing units or resources.

- Distributed systems can enable solving problems that are too large or complex for a single machine. They can also provide higher availability, reliability, and scalability than centralized systems. However, they also introduce new challenges, such as network latency, partial failure, security, and consensus.

- We will also discuss some of these challenges and how to deal with them in later sections.
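Message passing can be sketched with two components that talk over a TCP socket. This is a single-machine stand-in, not a real distributed system: both sides run on 127.0.0.1, and the newline-delimited JSON format, the "op" field, and the function names are all assumptions made for illustration.

```python
import json
import socket
import threading


def serve_one_client(server_sock, store):
    """Accept one connection and answer JSON requests until the peer closes."""
    conn, _ = server_sock.accept()
    with conn, conn.makefile("r", encoding="utf-8") as reader, \
            conn.makefile("w", encoding="utf-8") as writer:
        for line in reader:
            msg = json.loads(line)
            if msg["op"] == "set":
                store[msg["key"]] = msg["value"]
                reply = {"ok": True}
            elif msg["op"] == "get":
                reply = {"ok": msg["key"] in store, "value": store.get(msg["key"])}
            else:
                reply = {"ok": False, "error": "unknown op"}
            writer.write(json.dumps(reply) + "\n")
            writer.flush()


def request(reader, writer, message):
    """Send one message and wait for the reply."""
    writer.write(json.dumps(message) + "\n")
    writer.flush()
    return json.loads(reader.readline())


def demo():
    store = {}
    # Port 0 asks the operating system for any free port.
    with socket.create_server(("127.0.0.1", 0)) as server_sock:
        port = server_sock.getsockname()[1]
        node = threading.Thread(
            target=serve_one_client, args=(server_sock, store), daemon=True
        )
        node.start()
        with socket.create_connection(("127.0.0.1", port), timeout=5) as client, \
                client.makefile("r", encoding="utf-8") as reader, \
                client.makefile("w", encoding="utf-8") as writer:
            replies = [
                request(reader, writer, {"op": "set", "key": "a", "value": 1}),
                request(reader, writer, {"op": "get", "key": "a"}),
                request(reader, writer, {"op": "get", "key": "missing"}),
            ]
        node.join()
    return replies


if __name__ == "__main__":
    print(demo())
```

The client never touches the store directly; it only sends messages and reads replies. On a real network, each of those round trips could be slow or fail partway, which is where the challenges listed above come from.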

In the following chapters, we will explore each of the topics above in depth. For example, we may cover most of the following:

- Concurrency: We will learn what concurrency is and why it is important for high-performance computing. We will see how to achieve concurrency in Python using threads, runnables, and synchronization tools, along with modules such as concurrent.futures, asyncio, threading, queue, and contextvars. We will also explore some common concurrency problems and how to avoid or solve them.

- Parallelism: We will learn what parallelism is and how it differs from concurrency. We will discuss different types of parallelism, such as bit-level, instruction-level, data-level, and task-level. Depending on the level of parallelism, different hardware and software techniques can be used to implement it. We will also discuss some challenges and benefits of parallelism.

- Multiprocessing: We will learn what multiprocessing is and how it can improve the performance and efficiency of our Python programs. We will also learn how to use the multiprocessing module to create processes and pools of workers, share memory and state, communicate and synchronize between processes, and handle errors and exceptions. We will also compare multiprocessing with threading and explore some trade-offs and challenges of multiprocessing.

- Distributed systems: We will learn what a distributed system is and how it works, look at some examples and challenges of distributed systems, and build a distributed KV store. This will be the biggest blog we’ve worked on so far!

Stay tuned for more!