Processes

In the world of operating systems and concurrent computing, processes are foundational units of execution. They are independent and fundamental components that run programs and manage associated resources. Each process possesses its unique memory space, system resources, and execution environment, providing isolation and encapsulation.

What are Processes:

- Processes are independent and fundamental units of execution in an operating system.

- They are running programs and their associated resources.

- Each process has its own memory space, system resources, and execution environment.

- New processes are typically created when a running program asks the operating system to do so through system calls such as `fork` and `exec`. The `fork` system call creates a new process as a copy of the parent process, while `exec` loads a new program into the current process. Processes can be terminated explicitly through the `exit` system call or due to exceptional circumstances, such as a fatal signal.

- They can run concurrently, allowing multiple tasks to be executed simultaneously, making them the building blocks of multitasking systems.
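As a minimal sketch of the `fork`, `exec`, and `exit` life cycle described above, the following Python snippet uses the thin wrappers that the standard `os` module provides around those system calls. The helper name `run_in_child` and the small command it launches are illustrative assumptions, and the code assumes a Unix-like system where `os.fork` is available.

```python
import os
import sys


def run_in_child(argv):
    """Fork, exec `argv` in the child, and return the child's exit status."""
    pid = os.fork()                      # duplicate the calling process
    if pid == 0:
        # Child: replace this process image with a new program.
        try:
            os.execvp(argv[0], argv)
        except OSError:
            os._exit(127)                # exec failed; leave without parent cleanup
    # Parent: block until the child terminates, then decode its status.
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)    # normal termination via exit
    return -os.WTERMSIG(status)          # terminated by a signal


if __name__ == "__main__":
    # Hypothetical child program: a Python one-liner that exits with status 3.
    code = run_in_child([sys.executable, "-c", "import sys; sys.exit(3)"])
    print("child exited with status", code)
```

The parent and child run the same code until the `pid == 0` check, which is how a forked program tells the two copies apart.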

Why Should We Care About Processes:

Understanding processes is fundamental because they provide essential isolation and encapsulation. This isolation is crucial for system stability and security. Processes ensure that one process's failure or misbehavior doesn't directly impact others, enhancing the robustness of the system. They form the foundation of security by enabling sandboxing and process-level permissions, safeguarding sensitive data and the system's integrity.
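The isolation described above can be observed directly. The sketch below, which assumes a Unix-like system, forks a child that overwrites a variable and then dies abruptly. The names `parent_data` and `crash_in_child` are made up for illustration.

```python
import os
import signal

parent_data = ["original value"]


def crash_in_child():
    """Let a child modify its copy of parent_data and then crash."""
    pid = os.fork()
    if pid == 0:
        # Child: this write only touches the child's private copy of memory.
        parent_data[0] = "overwritten by child"
        os.kill(os.getpid(), signal.SIGKILL)   # simulate an abrupt failure
        os._exit(1)                            # not reached
    # Parent: collect the child and report what happened to it.
    _, status = os.waitpid(pid, 0)
    killed_by = os.WTERMSIG(status) if os.WIFSIGNALED(status) else None
    return parent_data[0], killed_by


if __name__ == "__main__":
    value, killed_by = crash_in_child()
    print("parent still sees:", value)
    print("child was terminated by signal:", killed_by)
```

The parent keeps running with its data intact because the child's write landed in a separate address space, and the child's failure is reported to the parent only as a termination status.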

- Concurrency and Processes: Processes are key components in achieving concurrency. They enable the execution of multiple independent tasks or programs concurrently on a single or multiple CPUs. Concurrency enhances system efficiency, responsiveness, and resource utilization. Processes are used by the operating system to isolate and manage these concurrently running tasks, ensuring they don't interfere with each other.

- Impact on Performance: The effective management of processes is crucial for optimizing system performance. While processes facilitate concurrency, they also introduce overhead due to context switching and inter-process communication. Efficient interrupt handling and context switching are essential to minimize this impact. In Linux, for example, the Completely Fair Scheduler (CFS) is a scheduling algorithm designed to share CPU time fairly among tasks while keeping switching overhead low.

- Operating System's Role: The operating system plays a central role in managing processes and achieving concurrency. To take one process off the CPU and bring another into execution, it performs a context switch, saving the state of the outgoing process and restoring the state of the incoming one. Context switches are often triggered by interrupts, which are signals that cause the CPU to suspend the current task and jump to a special routine, called an interrupt handler, that deals with the event. Interrupts can be generated by hardware devices or by software that needs urgent attention from the CPU, for example for input/output operations, network packets, timers, or system calls. The operating system coordinates this activity so that all tasks receive their fair share of CPU time, contributing to system efficiency and responsiveness.
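One way to see context switching from inside a program is to read the counters the kernel keeps for each process. The sketch below uses `resource.getrusage` from Python's standard library on a Unix-like system. The helper `context_switches_during` and the two sample workloads are illustrative assumptions, and the exact numbers depend on the machine and its load.

```python
import resource
import time


def context_switches_during(work):
    """Run work() and return (voluntary, involuntary) context switch counts."""
    before = resource.getrusage(resource.RUSAGE_SELF)
    work()
    after = resource.getrusage(resource.RUSAGE_SELF)
    return (
        after.ru_nvcsw - before.ru_nvcsw,    # process gave up the CPU (e.g. sleep, I/O)
        after.ru_nivcsw - before.ru_nivcsw,  # scheduler preempted the process
    )


def sleepy():
    """Workload that repeatedly yields the CPU by sleeping."""
    for _ in range(20):
        time.sleep(0.001)


def busy():
    """Workload that keeps the CPU busy without blocking."""
    total = 0
    for i in range(200_000):
        total += i * i
    return total


if __name__ == "__main__":
    print("sleepy (voluntary, involuntary):", context_switches_during(sleepy))
    print("busy   (voluntary, involuntary):", context_switches_during(busy))
```

A workload that blocks often tends to accumulate voluntary switches, while a CPU-bound one on a busy system tends to accumulate involuntary switches.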

How it Impacts Concurrency/Parallelism:

Processes can both facilitate and challenge parallelism. They enable the execution of multiple independent tasks in parallel, enhancing overall system throughput, especially on multi-core processors. However, managing multiple processes introduces overhead due to context switching and inter-process communication. Effective process management and scheduling are essential to maximize the benefits of parallel execution while mitigating this overhead. Some of the factors that affect processes and concurrency are:

- Number of Processes:

- The number of physical cores on a CPU plays a crucial role in determining the level of parallelism that can be achieved with processes. More cores allow for a higher degree of parallel execution.

- However, if there are too many processes competing for CPU time, the operating system's scheduler may spend significant resources managing context switches, which can introduce overhead. Balancing the number of active processes to match the available cores is essential to maximize parallelism.

- Memory Allocation:

- The available physical memory (RAM) on the system impacts how many processes can run simultaneously without incurring performance penalties due to excessive paging.

- The operating system is responsible for managing memory allocation. When there is insufficient physical memory to accommodate all active processes, the OS relies on virtual memory techniques like paging, which can introduce additional latency and impact parallelism. Efficient memory management is critical to prevent excessive swapping and maintain parallel execution.

- Context Switching:

- The CPU's design and cache architecture influence the efficiency of context switching. Hardware-level support, such as multiple register sets, reduces the time each switch takes and can enhance parallelism.

- Excessive context switching can reduce parallelism as it consumes CPU cycles for management tasks. The operating system's scheduling algorithm, context switch time, and the number of processes in the system all impact how efficiently context switching is handled.

- I/O and Disk Speed:

- The speed and type of storage devices, such as hard drives or solid-state drives, impact the speed at which processes can perform I/O operations. Faster I/O devices can reduce I/O-related bottlenecks.

- The operating system's I/O scheduling and buffering mechanisms can influence how well processes can overlap I/O operations with computation, thus affecting parallelism.

- CPU Architecture and Features:

- Modern CPU architectures include features like simultaneous multi-threading (SMT, which Intel markets as Hyper-Threading) and out-of-order execution. SMT lets a single physical core run more than one hardware thread at a time, while out-of-order execution extracts parallelism from within a single instruction stream.

- The operating system's ability to take advantage of these CPU features, such as scheduling threads on hyper-threaded cores effectively, impacts parallelism.

- Process Prioritization:

- The operating system's process priority management affects parallelism.

- High-priority processes may monopolize CPU time, while lower-priority processes may be starved, impacting parallelism. Balancing process priorities is critical.

- System Load and Resource Usage:

- The overall system load and resource usage impact the degree of parallelism. If other processes consume significant resources, there may be fewer resources available for parallel execution.

- Kernel and Scheduler Efficiency:

- The efficiency of the kernel's scheduler and management of system resources play a significant role in parallelism. An efficient scheduler can maximize the utilization of available CPU cores, while an inefficient one can introduce delays and reduce parallelism.
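The advice above about matching the number of active processes to the available cores can be sketched as follows. This is an illustration under stated assumptions, not a tuned implementation: the function `parallel_sum_of_squares` is a made-up workload, results travel back through pipes, and `os.fork` requires a Unix-like system.

```python
import os


def parallel_sum_of_squares(numbers, workers=None):
    """Split numbers across worker processes, by default one per logical CPU."""
    workers = workers or os.cpu_count() or 1
    workers = max(1, min(workers, len(numbers) or 1))  # never more workers than items
    children = []
    for i in range(workers):
        chunk = numbers[i::workers]          # every workers-th item, starting at i
        read_fd, write_fd = os.pipe()
        pid = os.fork()
        if pid == 0:
            # Child: compute a partial result and send it to the parent.
            os.close(read_fd)
            partial = sum(n * n for n in chunk)
            os.write(write_fd, str(partial).encode())
            os._exit(0)
        os.close(write_fd)                   # parent keeps only the read end
        children.append((pid, read_fd))
    total = 0
    for pid, read_fd in children:
        with os.fdopen(read_fd) as pipe:
            total += int(pipe.read())        # read until the child closes its end
        os.waitpid(pid, 0)                   # reap the child
    return total


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(1_000_000))))
```

In everyday Python code, `multiprocessing.Pool` or `concurrent.futures.ProcessPoolExecutor` would usually handle the forking and result passing. The manual version is shown only to make the one-process-per-core idea explicit.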

Understanding how these factors interact with hardware and the operating system is essential for optimizing parallelism. A well-tuned system that carefully manages processes, memory, I/O, and other resources can achieve higher levels of parallel execution and, as a result, better performance for parallel workloads.
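Several of these factors can be inspected from a running program. The following sketch gathers a few of them with Python's standard `os` module. The function name `describe_system` and the dictionary keys are illustrative choices, and some calls (such as `os.sched_getaffinity`) are only available on Linux, so the code checks for them first.

```python
import os


def describe_system():
    """Collect a few values that bound how much parallelism is available."""
    info = {
        "logical_cpus": os.cpu_count(),                # includes SMT hardware threads
        "niceness": os.nice(0),                        # adding 0 returns the current priority
        "page_size_bytes": os.sysconf("SC_PAGE_SIZE"), # unit used by the paging system
    }
    if hasattr(os, "sched_getaffinity"):
        # CPUs this process is actually allowed to run on (Linux only).
        info["usable_cpus"] = len(os.sched_getaffinity(0))
    try:
        info["load_average"] = os.getloadavg()         # 1, 5, and 15 minute averages
    except OSError:
        info["load_average"] = None                    # not obtainable on this system
    return info


if __name__ == "__main__":
    for key, value in describe_system().items():
        print(f"{key}: {value}")
```

A load average that stays well above the number of usable CPUs suggests that more processes are competing for CPU time than the hardware can run in parallel.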

Where Can I See This Happen:

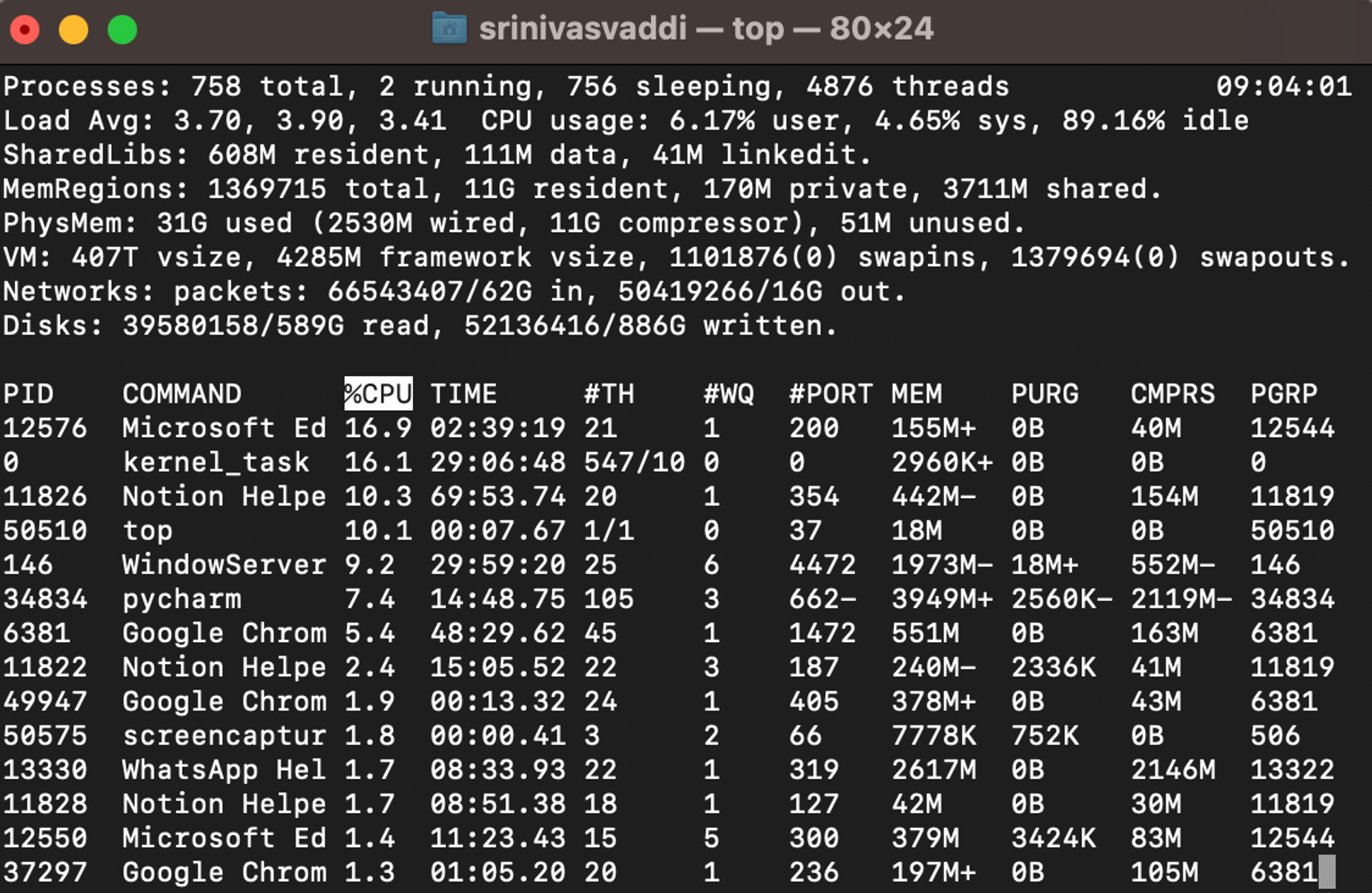

- Monitoring with `ps` and `top`:

- Linux Commands: The `ps` command is a powerful tool for displaying information about running processes. For example, `ps aux` provides a detailed list of all processes on the system. The `top` command offers a real-time, dynamic view of processes, system resource usage, and system load. These tools help you identify the most resource-intensive processes.

- Many Linux distributions maintain system logs that record process-related events. The `/var/log` directory contains various log files. For instance, `/var/log/syslog` and `/var/log/messages` often include information about system processes.

- Analyzing Process Statistics:

- The `pidstat` command provides detailed process statistics, including CPU and memory usage. For example, `pidstat -u` shows CPU statistics for processes, and `pidstat -r` displays memory-related statistics.

- Running `pidstat` with specific options allows you to gather detailed information about individual processes and their resource consumption. This helps in identifying processes that may be consuming an excessive amount of system resources.

- Process statistics and resource usage information can be logged by system monitoring tools. You can set up tools like Prometheus and Grafana to collect and visualize process-related data over time.

- Performance Analysis Tools:

- Linux Commands: Linux offers a range of performance analysis tools like `perf`, `strace`, and `vmstat`. These tools can be used to trace system calls, analyze system performance, and monitor resource utilization.

- Operations: By using these performance analysis tools, you can dive deep into process behavior and system performance. For example, you can use `strace` to trace system calls made by a process, helping you understand its interactions with the system. `vmstat` provides information about system-wide memory and CPU statistics.

- Logs: Performance analysis tools often generate logs that can be saved for further analysis. These logs can reveal details about the system's behavior during specific operations.
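Tools such as `ps` and `top` obtain much of their information from the `/proc` filesystem on Linux, and the same data can be read programmatically. The sketch below lists the processes with the largest resident memory. The function names `read_status` and `top_by_memory` are hypothetical, and the code assumes a Linux system with `/proc` mounted (it returns an empty list otherwise).

```python
import os


def read_status(pid, proc_root="/proc"):
    """Parse /proc/<pid>/status into a dict of field name -> raw value."""
    fields = {}
    with open(os.path.join(proc_root, str(pid), "status")) as f:
        for line in f:
            key, _, value = line.partition(":")
            fields[key] = value.strip()
    return fields


def top_by_memory(limit=5, proc_root="/proc"):
    """Return up to limit (rss_kb, pid, name) tuples, largest RSS first."""
    if not os.path.isdir(proc_root):
        return []
    rows = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue                          # skip non-process entries
        try:
            status = read_status(entry, proc_root)
            rss = status.get("VmRSS", "").split()
            rss_kb = int(rss[0]) if rss else 0  # kernel threads report no VmRSS
        except (OSError, ValueError):
            continue                          # process exited or is unreadable
        rows.append((rss_kb, int(entry), status.get("Name", "?")))
    rows.sort(reverse=True)
    return rows[:limit]


if __name__ == "__main__":
    for rss_kb, pid, name in top_by_memory():
        print(f"{pid:>7}  {rss_kb:>10} kB  {name}")
```

Processes can appear and disappear while the directory is being scanned, which is why read errors are skipped rather than treated as failures.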

By employing these Linux commands and tools, performing general monitoring operations, and referring to system logs, you can effectively observe processes in action, see where work is spread across multiple processes, and assess how efficiently it runs. This level of monitoring and analysis is crucial for system administrators and developers to ensure optimal system performance and resource utilization.